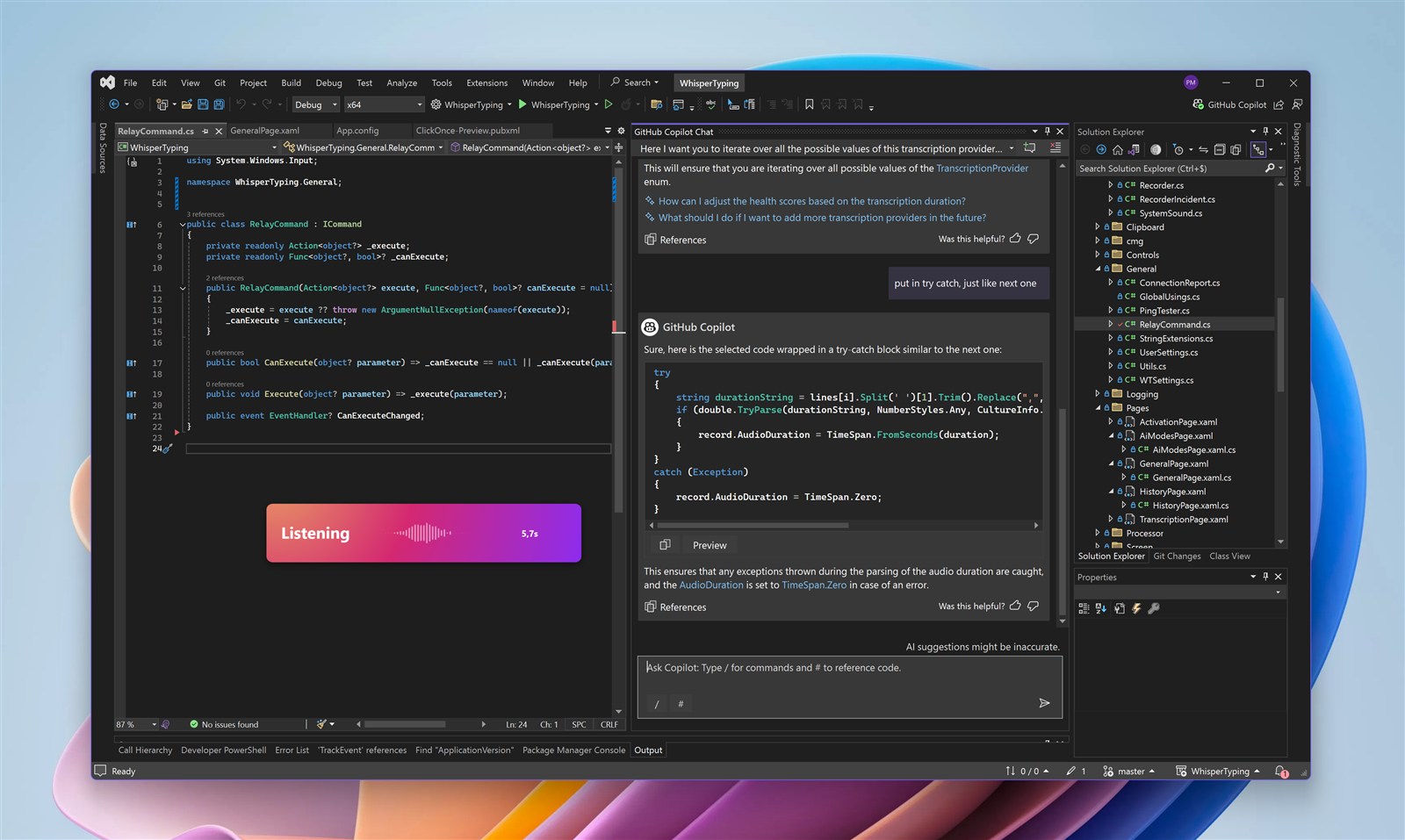

GitHub Copilot is the AI pair programmer millions of developers use daily. Copilot Chat lets you ask questions, get code explained, and request changes, but typing those requests slows you down. With WhisperTyping, you speak to Copilot naturally, right from any IDE. Ready to vibe code?

GitHub Copilot Chat

Copilot Chat is built into VS Code, Visual Studio, and JetBrains IDEs. You can ask it to explain code, fix bugs, write tests, and refactor - all through natural language. Voice input makes this conversation flow even more naturally.

Always Ready When You Are

Here's what makes WhisperTyping essential for Copilot users: your hotkey is always available. Whether you're reviewing code, testing your app, or reading documentation - hit your hotkey and start speaking. Your next prompt is ready to go before you even switch windows.

See a bug while testing? Hit the hotkey: "The login form isn't validating email addresses correctly." By the time you're back in your editor, your thought is captured and ready to paste into Copilot Chat.

Works in Every IDE

WhisperTyping works with Copilot Chat in all supported environments:

- VS Code: The most popular Copilot environment

- Visual Studio: Full support for .NET developers

- JetBrains IDEs: IntelliJ, PyCharm, WebStorm, and more

- GitHub.com: Copilot in pull requests and issues

Custom Vocabulary

Speech recognition can struggle with technical terms. WhisperTyping lets you add your stack to its vocabulary:

- Framework names: React, FastAPI, NextJS

- Functions and hooks: useState, useEffect, handleSubmit

- Your project's class names, variables, and conventions
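This post doesn't say how WhisperTyping applies the vocabulary list above internally. One common approach with Whisper-style speech models is to pass the expected terms as a short text prompt that nudges the model toward those spellings. The minimal sketch below assumes that approach; the function name `build_vocabulary_prompt` and the 200-character budget are hypothetical, not WhisperTyping's actual implementation.

```python
# Hypothetical sketch: turn a custom vocabulary list into a text prompt that a
# Whisper-style model could use to bias transcription. The function name and
# the character budget are assumptions, not WhisperTyping's implementation.

def build_vocabulary_prompt(terms: list[str], max_chars: int = 200) -> str:
    """Join unique, non-empty terms into a comma-separated prompt within a size budget."""
    seen: set[str] = set()
    kept: list[str] = []
    length = 0
    for term in terms:
        term = term.strip()
        if not term or term in seen:
            continue  # skip blanks and duplicates
        # +2 accounts for the ", " separator placed before every term after the first
        extra = len(term) + (2 if kept else 0)
        if length + extra > max_chars:
            break  # earlier terms take priority; stop once the budget would be exceeded
        seen.add(term)
        kept.append(term)
        length += extra
    return ", ".join(kept)


vocabulary = ["React", "FastAPI", "NextJS", "useState", "useEffect", "handleSubmit", "React"]
print(build_vocabulary_prompt(vocabulary))
# React, FastAPI, NextJS, useState, useEffect, handleSubmit
```

With the open-source `openai-whisper` package, a prompt like this could be supplied through the `initial_prompt` argument of `model.transcribe(...)`. Whether WhisperTyping does anything similar is an assumption.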

Screen-Aware Transcription

WhisperTyping reads your screen using OCR. When you're looking at code, it sees the same function names, error messages, and variables you do - and uses them to transcribe accurately.
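How WhisperTyping turns on-screen text into transcription hints isn't documented here. As an illustration only, the sketch below takes text that has already been extracted by OCR (the OCR step itself is out of scope) and pulls out identifier-shaped words, such as snake_case names, camelCase or PascalCase names, and constants. These are exactly the terms speech recognition tends to get wrong. The helper names `looks_technical` and `screen_terms` and the heuristic itself are hypothetical.

```python
import re
from collections import Counter

# Identifier-shaped words: a letter or underscore, then letters, digits, or underscores.
IDENTIFIER = re.compile(r"\b[A-Za-z_][A-Za-z0-9_]*\b")


def looks_technical(word: str) -> bool:
    """Hypothetical heuristic: keep words with an underscore, a digit,
    or a capital after the first letter (camelCase, PascalCase, CONSTANTS)."""
    has_underscore = "_" in word
    has_inner_capital = any(c.isupper() for c in word[1:])
    has_digit = any(c.isdigit() for c in word)
    return has_underscore or has_inner_capital or has_digit


def screen_terms(ocr_text: str, limit: int = 10) -> list[str]:
    """Return the most frequent technical-looking words in OCR'd screen text."""
    counts = Counter(w for w in IDENTIFIER.findall(ocr_text) if looks_technical(w))
    return [word for word, _ in counts.most_common(limit)]


screen = """
def validate_email(address):
    if not EMAIL_PATTERN.match(address):
        raise ValueError("invalid email")
TypeError: validate_email() missing 1 required positional argument
"""
print(screen_terms(screen))
# ['validate_email', 'EMAIL_PATTERN', 'ValueError', 'TypeError']
```

Ordinary English words like "address" or "missing" are filtered out, so the resulting list stays short enough to serve as context for the speech model.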

Why Voice for Copilot?

Copilot Chat works best with clear, contextual requests. Voice makes it effortless:

- Explain code while looking at it: "What does this regex pattern do?"

- Request changes naturally: "Add null checking to this function"

- Ask for tests: "Write unit tests for the authentication module"

- Debug issues: "Why might this be returning undefined?"